By Deepa Seetharaman

Facebook Inc. has spent more than a decade building an efficient machine to analyze and monetize the content on its platform. Now, after years of neglect, the social-media giant is throwing more resources at defending its platform from bad actors.

The annual budget for some of Facebook's content-review teams has ballooned by hundreds of millions of dollars for 2018, according to people familiar with the figures. Much of the additional outlay goes to hiring thousands of new content moderators, they said. Facebook says it is hiring 10,000 people -- including staffers and contractors -- by the end of the year to work on safety and security issues including content review, roughly doubling the total in place this past fall.

Facebook also plucked two executives from its respected growth team to oversee its expansion of content-review operations and to build technical tools that help measure the prevalence of hate speech and track how well its moderators uphold its content rules, the company says. The company outlined some of those measures in a blog post Tuesday.

The moves reflect Facebook's increased focus on stamping out graphic violence, hate speech, fake accounts and other types of objectionable posts that have marred the social-media giant's image and drawn the ire of regulators world-wide in the past 18 months.

Facebook also is contending with a backlash over how it handles user data. The company is examining tens of thousands of apps that previously had access to its user data to determine if there were instances of misuse. On Monday, it said it had suspended 200 apps so far for suspicion of misusing data.

Intensifying scrutiny of content on Facebook has compelled top executives including Chief Executive Mark Zuckerberg to re-evaluate the resources the company has given to content review, which in the past have been a small fraction of those allocated to promoting new tools and products.

For 2016, Facebook allocated roughly $150 million to its community operations division, which oversees content moderators, according to people familiar with the figures. That year, in a single product initiative, Mr. Zuckerberg approved a budget of more than $100 million to pay publishers to put more live videos on Facebook, The Wall Street Journal previously reported.

After the 2016 U.S. presidential race, Facebook was criticized for failing to detect the manipulation of its platform. The live-video product also drew increasing scrutiny because people were using it to broadcast crimes and acts of violence. For 2017, Facebook increased the community operations team's budget by almost 50% to $220 million, one person familiar with the figures said.

Facebook last year reported net income of $15.93 billion on $40.65 billion in revenue.

Community operations and the community-integrity team -- which builds automated tools that let users flag problematic content and help content reviewers sift through user reports -- last year requested a combined 2018 budget of $770 million, the person familiar with the figures said.

Mr. Zuckerberg said they weren't asking for enough and pushed the teams to accelerate their growth plans, another person familiar with the situation said. He ultimately gave them more money than requested, the person said. The final amount couldn't be learned.

Facebook executives say they are making up for lost time.

"As we built Facebook in the early years, this was something that frankly didn't get enough attention," said Guy Rosen, vice president of product management. Mr. Rosen, a longtime executive on Facebook's growth team, took on oversight of the community-integrity team, previously called protect and care, after the 2016 election.

In the past few years, "everyone has come to realize that we really need to over-invest in this," Mr. Rosen told reporters last week. "This is the top priority for the company."

Facebook remains outgunned in many ways. Its services are offered in more than 100 languages, but its content-review teams speak a little more than 50. That means problematic posts continue to thrive, especially in developing markets in Southeast Asia, where Facebook has limited language expertise.

Rapid change also has caused high turnover among Facebook content moderators in some cities, people familiar with the matter say.

A Facebook spokeswoman said it regularly surveys workers and has found that a number of factors affect retention rates in those operations.

For years, many Facebook employees didn't consider working on content issues as prestigious as being part of the growth, news feed and advertising teams, current and former employees say. Executives, particularly on the business and finance side, viewed content review as a "pure cost center," one person said.

Mr. Rosen's new role was a sign that Facebook was starting to take content review seriously. Mr. Zuckerberg often turns to members of Facebook's growth team to help crack tough problems that he sees as a priority, according to current and former employees.

Over the course of 2017, the community operations and community-integrity teams started working more closely, with Mr. Rosen's team leading the charge.

Some of Facebook's additional spending is expected to go to hiring engineers and developing artificial-intelligence software that can automatically detect problematic content -- an emerging technology that Mr. Zuckerberg repeatedly touted in his appearance before Congress last month. Facebook says it has successfully used A.I. software to uproot terror-related posts.

For many other kinds of content review, including identifying hate speech and determining whether an ad requires a political disclosure, Facebook still needs humans to do the work.

Many of those workers will be based out of Facebook's current offices in Dublin, Ireland; Austin, Texas; and Menlo Park, Calif. But Facebook also is hiring in other U.S. cities in Florida and Texas, as well as overseas in places like Casablanca, Morocco, through staffing agencies and contractors, people familiar with the matter said.

The outside agencies that manage the workers measure several aspects of performance, including how quickly reviewers get to newly filed complaints and how closely they follow Facebook's content rules. Those who fall short are warned, irritating some content reviewers who say Facebook's performance expectations are constantly shifting.

Write to Deepa Seetharaman at Deepa.Seetharaman@wsj.com

(END) Dow Jones Newswires

May 15, 2018 10:22 ET (14:22 GMT)

Copyright (c) 2018 Dow Jones & Company, Inc.

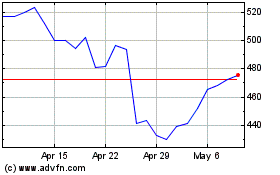

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Aug 2024 to Sep 2024

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Sep 2023 to Sep 2024