Facebook to Start Taking Down Posts That Could Lead to Violence

July 18 2018 - 9:28PM

Dow Jones News

By Deepa Seetharaman

Facebook Inc. said Wednesday it will start removing

misinformation that could spark violence, a response to mounting

criticism that the flow of rumors on its platform has led to

physical harm to people in countries around the world.

The new policy is a shift in Facebook's broader approach to

misinformation, which until now has been focused on suppressing its

popularity on the platform without scrubbing the problematic

content entirely. But the company has also faced more questions

about the platform's role as a vector for false information that

can inflame social tensions.

The company will rely on local organizations of its choosing to

decide whether specific posts contain false information and could

lead to physical violence, company officials said. If both hold

true, the posts will be taken down.

A Facebook spokeswoman said the company will implement the new

policy first in Sri Lanka and later in Myanmar, two countries where

some people and groups have used Facebook to spread rumors that

ultimately led to physical violence. The attacks in those

countries have garnered significant media attention.

"There were instances of misinformation that didn't violate our

distinct community standards but that did contribute to physical

violence in countries around the world," said Tessa Lyons, a

product manager on Facebook's news feed, citing Sri Lanka and

Myanmar specifically. "This is a new policy created because of that

feedback and those conversations."

The new policy raises a number of questions that company

officials said it is too early to answer, including who its

partners will be and what the criteria are for becoming one. A

Facebook spokeswoman said she couldn't provide a list of

organizations Facebook plans to team up with or countries where

it could deploy this new policy.

It also isn't clear how those partners will determine whether or

not content is false or could lead to violence. Nor was it clear

how Facebook would ensure those organizations remain independent or

relatively free from political bias.

Ms. Lyons said Facebook was in the early stages of creating

these policies and didn't have details to share publicly. In an

interview, she said Facebook will rely on outside organizations'

judgment because they have "local context and local expertise."

Facebook has relied on third-party organizations to help it

navigate other thorny issues in the past. In December 2016, while

facing mounting pressure for allowing misinformation to proliferate

on the platform during the U.S. election, Facebook said it would

team up with fact-checking organizations in the U.S. to help

suppress false news reports on the platform. The organizations

determine which claims are true and false. If enough organizations

say a claim is false, Facebook will lower the rank of posts containing it.

The social-media company has struggled to address criticism that

its content policies and enforcement muscle fail to mitigate social

harm caused by misinformation, which in some cases includes physical

violence. Chief Executive Mark Zuckerberg has said it is Facebook's

responsibility to manage the downsides of its platform.

Earlier this month, India's government rebuked the

Facebook-owned messaging service WhatsApp for allowing rumors and

false reports to circulate on its service after a series of deadly

attacks on victims mistakenly accused of kidnapping children.

Over the past week, Facebook officials have faced repeated

questions from lawmakers and reporters about why the company allows

InfoWars, a site that has spread discredited conspiracy theories

about school shootings and other issues, to remain on the platform. On

Tuesday, a company official said at a congressional hearing that

InfoWars hadn't yet met a threshold required to remove the page

from Facebook, without explaining what the threshold is.

In an interview with Recode published Wednesday, Mr. Zuckerberg

sparked more controversy when he said Holocaust denial should be a

protected form of speech on Facebook. "I don't believe that our

platform should take that down because I think there are things

that different people get wrong," he said. "I don't think that

they're intentionally getting it wrong."

Jonathan Greenblatt, the chief executive of the Anti-Defamation

League, said this speech causes harm. "Holocaust denial is a

willful, deliberate and longstanding deception tactic by

anti-Semites that is incontrovertibly hateful, hurtful, and

threatening to Jews," Mr. Greenblatt said in a statement. "Facebook

has a moral and ethical obligation not to allow its

dissemination."

Write to Deepa Seetharaman at Deepa.Seetharaman@wsj.com

(END) Dow Jones Newswires

July 18, 2018 21:13 ET (01:13 GMT)

Copyright (c) 2018 Dow Jones & Company, Inc.

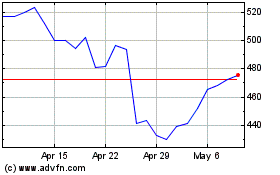

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Apr 2024 to May 2024

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From May 2023 to May 2024