By Niharika Mandhana and Rhiannon Hoyle

Facebook Inc. left a gruesome video of shootings at a New Zealand mosque up on its site for half an hour after a user brought it to the company's attention, a previously unreported delay that highlights the social media company's difficulty in controlling violent content on its platform.

The timetable, based on times supplied overnight by Facebook and the New Zealand police, means the video showing men, women and children being gunned down at a mosque in Christchurch was available on Facebook's site for an hour from the time it started rolling to the time it was ultimately removed.

The live-streaming video was taken by the shooter as he began a rampage that left 50 people dead Friday afternoon. The broadcast began on Facebook at around 1:33 p.m. and ended 17 minutes later, at approximately 1:50 p.m. A Facebook user flagged the post to the company 12 minutes after that, Facebook said. The social media team of the New Zealand police alerted Facebook to the video at 2:29 p.m., police told the Journal. Facebook said it took the video down within minutes thereafter.

New Zealand police said the timeline was approximately correct.

Facebook declined to comment.

The delay was the result of a string of gaps in the systems Facebook has set up to stop violent videos and other objectionable content from appearing on its platform. Artificial intelligence programs the company relies upon to block bad content failed to catch the video during its live broadcast and afterward. Then the alert from the user, who flagged the video after the broadcast ended, wasn't fast-tracked, because for videos that have already ended, Facebook fast-tracks only reports of suicide attempts.

By the time the video was taken down, it had been viewed 4,000 times on the site, Facebook said. It was copied millions of times on Facebook and other sites beyond Facebook's control.

Hany Farid, a professor of computer science at Dartmouth College, said the company had for years focused on expanding aggressively and unleashing potent new products without putting the necessary protections in place beforehand.

"The problem is that there are just no safeguards," Mr. Farid said. "The company can't say these are unintended or unforeseen consequences. These were foreseeable."

Facebook has said it is doing more to moderate the vast number of posts that go online. It has doubled down on AI as a solution, in addition to using some 15,000 human content reviewers. In a post on the company's website Wednesday, Guy Rosen, Facebook's vice president for integrity, acknowledged limitations in the company's handling of live broadcasts and said its artificial intelligence tools hadn't been able to catch the video.

"We recognize that the immediacy of Facebook Live brings unique challenges," he wrote.

The killings last week left New Zealand reeling. Police have charged Australian Brenton Tarrant with murder in the case, saying he mowed down worshipers who had gathered for Friday prayers at two mosques. He hasn't entered a plea.

The social media giant has come under fire in many parts of the world--from Myanmar to Sri Lanka--for failing to take sufficient action against hate speech, fake news and misinformation on its site. The problems are amplified with Facebook Live, the video broadcast tool it introduced in 2016. Facebook said users post millions of live videos a day. In the past, they have included live beatings and murders.

Australian Prime Minister Scott Morrison asked over the weekend whether Facebook should be allowed to offer services such as live videos if it can't control them.

Some critics have called on the site to impose a time delay during which videos could be checked. Mr. Rosen said such a delay would be swamped by the high volume of live videos broadcast daily. He also said it would slow reports by users that could enable authorities to provide help on the ground.

Facebook has touted the success of its technical tools in tackling some kinds of terrorist content. The company said last year that Facebook itself--not its users--was identifying nearly all of the material related to Islamic State and al Qaeda that it removed. But the platform, accessed each month by some 2 billion users posting in more than 80 languages, hasn't had that success with other kinds of extremist content.

In his post, Mr. Rosen said artificial intelligence has worked well to identify nudity and terrorist propaganda, because there are lots of examples of such content to train the machines. But the AI didn't have enough material to learn to recognize content like the mosque massacre, he said, because "these events are thankfully rare."

Once the video was flagged by a user, it appeared to get stuck in a queue to be reviewed. Mr. Rosen said the social media site accelerates its review of all live videos that are flagged by users while they are still being broadcast. The policy also applies to videos that have recently ended, but only in the case of suicide reports. The Christchurch video wasn't reported as a suicide and as a result wasn't prioritized.

The company will re-examine its procedures and expand the categories for accelerated review, Mr. Rosen said.

Mr. Farid, the Dartmouth professor, said the delay in taking the video down even after it was flagged by a user shows Facebook's legions of content checkers aren't enough for a platform of Facebook's size.

Some of New Zealand's largest advertisers, including ASB Bank, suspended advertising on Facebook and Google after the shooter's video was posted.

Lindsay Mouat, the CEO of the Association of New Zealand Advertisers, said New Zealand corporations "are just appalled with the way that live video streaming of what happened could actually get up and occur in Facebook Live without any moderation."

In the first 24 hours, Facebook's systems caught 1.2 million videos of the attack as they were being uploaded; another 300,000 made it onto the site and were removed afterward. But users were able to keep the video alive by posting copies on other sites.

Mr. Rosen said a user of the website 8chan, which is often home to white supremacist and anti-Muslim content, posted a link to a copy of the video on a file-sharing site, from which it spread more broadly.

Facebook struggled to keep the video from reappearing on its own site, he said, because a "core community of bad actors" worked to continually re-upload edited versions to defeat the company's detection tools. Facebook found and blocked more than 800 variants of the video that were circulating, he said.

Write to Niharika Mandhana at niharika.mandhana@wsj.com and Rhiannon Hoyle at rhiannon.hoyle@wsj.com

(END) Dow Jones Newswires

March 21, 2019 11:00 ET (15:00 GMT)

Copyright (c) 2019 Dow Jones & Company, Inc.

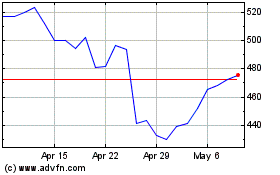

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Mar 2024 to Apr 2024

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Apr 2023 to Apr 2024