By Deepa Seetharaman

Facebook Inc. is reviewing how it handles objectionable content after a Cleveland man posted a video of a murder on the site, sparking outrage over the social-media giant's failure to more closely monitor violence on its platforms.

On Sunday afternoon, 37-year-old Steve Stephens posted two videos on Facebook, one announcing his intent to commit murder and a second video of himself allegedly approaching an older man and shooting him in the head. About 10 minutes later, he went on Facebook Live, an instantaneous broadcasting tool, to talk about this and other alleged crimes.

Mr. Stephens started posting the videos on Facebook at 2:09 p.m. Eastern time, according to Facebook. Facebook received its first report about the second video, containing footage of the shooting, around 4 p.m., and the company removed Mr. Stephens' Facebook page around 4:22 p.m., more than two hours after he started posting the videos.

In a blog post Monday, Justin Osofsky, Facebook's vice president of global operations, acknowledged that the company's content review process is flawed and said it wants to improve how users flag objectionable content. Mr. Osofsky said Facebook is also looking into how the company manages and prioritizes the reported content.

"As a result of this terrible series of events, we are reviewing our reporting flows to be sure people can report videos and other material that violates our standards as easily and quickly as possible," Mr. Osofsky said.

The video is among the most extreme examples of Facebook's video tools being used to promote or showcase violence. It revives questions about Facebook's readiness to handle sensitive or violent content broadcast live on its platform, the subject of a Page One article in the Journal last month.

"This is a horrific crime and we do not allow this kind of content on Facebook," a Facebook spokeswoman said in a statement. "We work hard to keep a safe environment on Facebook."

The video was posted two days before Facebook's annual developer conference called F8, during which Chief Executive Mark Zuckerberg and other executives will showcase the new features and tools the company plans to introduce to its nearly two billion users. The conference will kick off with keynote remarks from Mr. Zuckerberg, during which he might address the violence on the platform.

According to a tally by The Wall Street Journal, people have used Facebook Live to broadcast more than 60 sensitive videos, including murder, suicides and the beating in January of a mentally disabled teenager in Chicago.

Facebook has strict standards prohibiting using its site to promote violence, and employs content moderation teams to check for violations.

Facebook relies on thousands of contractors world-wide to review content, but live-video reports are handled by a small, specialized team of contractors in the Bay Area who work around the clock in eight-hour shifts, the company confirmed. Current and former employees at Facebook said its content-moderation teams were trained to remove offensive or violent live videos, such as beheadings and pornography.

If a video hits a certain number of concurrent views, it is automatically sent to the contractors for review, according to people familiar with the matter and Facebook.

But content moderation experts say it is difficult for tech companies to uphold their standards because of the sheer amount of content posted online every day and the comparatively small number of content reviewers. Facebook is developing technology to detect violent and objectionable content, but understanding what's happening in a video is still complicated for software.

"Because these processes cannot be easily and reliably automated -- particularly those videos that are running as live streams -- there is no reason to think that people will not continue to find terrible ways to use the platform," said Sarah T. Roberts, an assistant professor of information studies at the University of California, Los Angeles and an expert on content moderation.

"The question that I have is why these consequences were not adequately weighed before the rollout of the Facebook Live tool," Ms. Roberts added.

Facebook previously told the Journal that it thought "long and hard" about what people might share on live video, including shocking or traumatic videos.

Facebook used last year's F8 conference to celebrate the then-recent global rollout of Facebook Live, which allowed its users to broadcast in real time. Facebook started promoting video on its platform in 2014.

Mr. Zuckerberg made Facebook Live his priority in early 2016 and directed employees to get the product ready for global consumption in two months. The rapid rollout reflected the common approach of product development in Silicon Valley: ship it out and work out the kinks along the way.

The Facebook spokeswoman said the company is "in touch with law enforcement in emergencies when there are direct threats to physical safety."

Typically, Facebook will share basic records with law enforcement, including name, recent logins and credit-card information, under subpoena. It will share more, including IP addresses, if it receives a valid court order.

Facebook requires a search warrant to hand over what it calls a "neoprint," which includes private messages, photos, videos and other information.

U.S. law generally shields internet companies from liability for material posted on their platforms. But in recent months, Mr. Zuckerberg has said that while Facebook only builds tools for people to communicate, it bears some responsibility for how its technology is used.

Write to Deepa Seetharaman at Deepa.Seetharaman@wsj.com

(END) Dow Jones Newswires

April 17, 2017 19:28 ET (23:28 GMT)

Copyright (c) 2017 Dow Jones & Company, Inc.

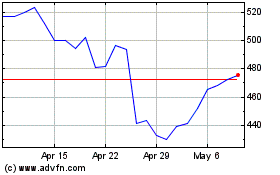

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Mar 2024 to Apr 2024

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Apr 2023 to Apr 2024