Hours after the December shootings in San Bernardino, Calif.,
Mark Wallace asked his employees at the nonprofit Counter Extremism
Project to comb social media for profiles of the alleged
attackers.

They failed. A team at Facebook Inc. had already removed a
profile for Tashfeen Malik, after seeing her name in news
reports.

The incident highlights how Facebook, under pressure from
government officials, is more aggressively policing material it
views as supporting terrorism. The world's largest social network
is quicker to remove users who back terror groups and investigates
posts by their friends. It has assembled a team focused on
terrorist content and is helping promote "counter speech," or posts
that aim to discredit militant groups like Islamic State.

The moves come as attacks on Westerners proliferate and U.S.
lawmakers and the Obama administration intensify pressure on
Facebook and other tech companies to curb extremist propaganda
online. Top U.S. officials flew to Silicon Valley on Jan. 8 to
press their case with executives including Facebook Chief Operating
Officer Sheryl Sandberg. Last week, Twitter Inc. said it suspended
125,000 accounts associated with Islamic State.

Tech companies "have a social responsibility to not just see
themselves as a place where people can freely express themselves
and debate issues," Lt. Gen. Michael Flynn, who ran the U.S.
Defense Intelligence Agency from 2012 to 2014, said in an
interview.

Facebook's new approach puts the company in a tight spot,
forcing it to navigate between public safety and the free-speech
and privacy rights of its nearly 1.6 billion users.

After the Jan. 8 meeting, the Electronic Frontier Foundation, a
nonprofit privacy organization, urged Facebook and other tech
companies not to "become agents of the government."

Facebook said it believes it has an obligation to keep the
social network safe.

Political winds have shifted since the 2013 disclosures by
former National Security Agency contractor Edward Snowden about
government surveillance. Then, Facebook assured users that the U.S.
government didn't have direct access to its servers and started
regularly reporting the volume of government requests for user
data. The enhanced push against extremist content is a separate
initiative.

Leading Facebook's new approach is Monika Bickert, a former
federal prosecutor whose team sets global policy for what can be
posted on the social network.

Facebook takes a hard line toward terrorism and terrorists, she
said. "If it's the leader of Boko Haram and he wants to post
pictures of his two-year-old and some kittens, that would not be
allowed," said Ms. Bickert, Facebook's head of global policy
management.

Facebook relies on users to report posts that violate its
standards, such as images that "celebrate or glorify violence."
After an attack, it scours news reports or asks police agencies and
activists for names so it can remove suspects' profiles and
memorialize victims' accounts.

Facebook has strengthened the process over the past year. The
social network says it uses profiles it deems supportive of
terrorism as a jumping-off point to identify and potentially delete
associated accounts that also may post material that supports
terrorism.

Executives say they began this fanning-out process, which they
use only in terrorism cases, about a year ago after consulting with
academics and experts who said terrorists typically operate in
groups. The searches are conducted by a multilingual team, part of
the community operations group that reports to Ms. Bickert, which
examines the events people have attended or pages they have
"liked," among other things.

In some cases, Ms. Bickert consults Facebook lawyers about
whether a post contains an "imminent threat." Facebook's legal team
makes the ultimate decision about whether to notify law
enforcement. Simply posting praise of Islamic State may not fit the
bill.

Neither Facebook nor law-enforcement agencies would discuss in
detail how closely they cooperate, in part to avoid tipping off
terror groups, they said. Facebook also wouldn't discuss the
criteria it uses to determine what material supports terrorism and
how many terror experts it has hired.

Some counterterrorism experts say Facebook shouldn't delete user
accounts quickly, so that police can monitor them and possibly snare
others. But Ms. Bickert, who worked on public corruption and
gang-related violence cases as a prosecutor, said leaving up
terrorist messages could cause harm.

The company is also helping activists who try to discredit
organizations like Islamic State with counter-propaganda. That
includes lessons on how to create material more likely to be shared
and go "viral," said Erin Saltman, a senior counter-extremism
researcher for the Institute for Strategic Dialogue. Facebook also
offers ad credits worth hundreds of dollars to groups to defray the
cost of testing their campaigns.

Facebook's new approach wins plaudits from some academics and
activists, who say the company is more helpful than other tech
firms. Mr. Wallace of the Counter Extremism Project, a former U.S.
ambassador to the United Nations, calls Facebook "the social-media
company that's on the greatest trajectory to be a solution to the
problem."

Some find Facebook's changes more rhetorical than substantive.
David Fidler, professor of law at Indiana University, attended a
recent panel at the U.N. featuring Ms. Bickert and other tech
executives. He said the executives offered few specifics beyond
"our terms of service don't have a place for terrorism."

Ms. Bickert said the U.N. meetings made her realize Facebook
needed to be more public about its efforts.

In Washington, the House in December passed a measure that
requires the Obama administration to devise a strategy to combat
terrorists' use of social media. A Senate proposal would extend to
terrorism a 2008 law that requires Facebook and other tech
companies to report content related to child pornography to law
enforcement.

Some see parallels between the two areas. In 2009, when tech
companies were struggling with how to deal with child pornography,
Microsoft and Hany Farid, a computer science professor at Dartmouth
College, developed a tool to identify child-porn images and prevent
users from sharing them. Facebook uses the tool.

Mr. Farid said the approach could be adapted to create a
database of catchphrases or hashtags used by terrorism supporters.

Facebook and other experts say the two problems are different.

"When it comes to removing support of terrorism, the work is
highly contextual," said Ms. Bickert.

Write to Natalie Andrews at Natalie.Andrews@wsj.com and Deepa
Seetharaman at Deepa.Seetharaman@wsj.com

(END) Dow Jones Newswires

February 11, 2016 20:35 ET (01:35 GMT)

Copyright (c) 2016 Dow Jones & Company, Inc.

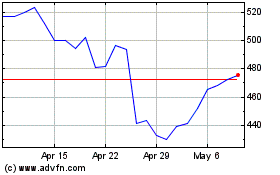

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Aug 2024 to Sep 2024

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Sep 2023 to Sep 2024