By Sara Castellanos

Companies are looking to supercharge corporate decision making through artificial intelligence, but first they need help preparing the troves of customer and business data they have acquired over the years.

Morgan Stanley, for example, has set up a Data Center of Excellence to help address the ongoing deluge and complexity of enterprise data and to help develop artificial intelligence applications that rely on high-quality data.

About 30 experts specializing in data architecture, infrastructure and governance are acting as data advisers to different business and technology divisions within the bank, partly to ensure AI and other applications are being built with the right data.

"The reason we have the Center of Excellence is we want to continue to build on AI, and we understand this is one of the foundational areas that is needed," said Katherine Wetmur, head of quality assurance and production management, who is transitioning into the role of international chief information officer. Ms. Wetmur works with the team to develop services across business units and oversees separate data-related projects.

Morgan Stanley recently posted a profit of $2.4 billion, or $1.39 a share, on revenue of $10.3 billion. Both figures were lower than in the same period a year earlier, when the firm earned $2.7 billion, or $1.45 a share, on record quarterly revenue of $11.1 billion.

Created last year with staffers spread across New York and London, the Data Center of Excellence works with the bank's various business and infrastructure divisions to establish best practices and controls around data quality and data security.

The data group is working closely with Morgan Stanley's AI team to make sure AI algorithms in areas such as commercial real-estate loan analysis, fraud detection and virtual wealth management advisory services are using the appropriate data. "We want to make sure we have high-quality data, because your insights are only as good as the data that underpins it," said Gez Hester, head of the Data Center of Excellence.

High-quality data is data that's accurate, up-to-date and complete, Mr. Hester said. To achieve that, the data team must catalogue all the data that's available in, for example, the commercial real-estate loan business and understand the data's provenance, where it is being sourced from, and how it is going to be used. Data provenance is key for ensuring that the insights are useful and trustworthy, he said.
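
As a rough illustration only, the three qualities Mr. Hester names (accurate, up-to-date, complete), plus a known source, could be checked record by record along the lines below. This is a minimal sketch, not Morgan Stanley's tooling: the `LoanRecord` fields, the `quality_issues` helper and the 30-day freshness threshold are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class LoanRecord:
    # Every field name here is hypothetical, chosen only for illustration.
    loan_id: str
    balance: Optional[float]       # None means the value was never captured
    as_of: date                    # date the record was last refreshed
    source_system: Optional[str]   # where the record came from (provenance)


def quality_issues(record: LoanRecord, today: date,
                   max_age_days: int = 30) -> List[str]:
    """Return a list of quality problems found in one record (empty if none)."""
    issues = []
    # Completeness: a required value must be present.
    if record.balance is None:
        issues.append("incomplete")
    # Accuracy (a simple plausibility rule): a loan balance cannot be negative.
    elif record.balance < 0:
        issues.append("inaccurate")
    # Freshness: the record must have been refreshed recently enough.
    if today - record.as_of > timedelta(days=max_age_days):
        issues.append("stale")
    # Provenance: the originating system must be recorded.
    if not record.source_system:
        issues.append("unknown provenance")
    return issues
```

A record that passes every check yields an empty list. A record with a missing balance, an old refresh date and no recorded source would be flagged on three counts.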

At the dawn of AI-enabled decision making, the notion of data as a strategic asset is rising at companies such as Morgan Stanley. At the same time, managing data has grown increasingly complex, the result of multiple data centers and data storage repositories across disparate systems, multiple copies of the same data and new data privacy laws.

The new data emphasis is changing the CIO focus at some companies from big business applications to the data layer, said Anil Chakravarthy, chief executive of data management firm Informatica.

Poor-quality, inaccurate and unreliable customer and business data is preventing companies from leveraging AI, according to recent studies. About 76% of firms are aiming to extract value from data they already have, but only 15% said they currently have the right kind of data needed to achieve that goal, according to PricewaterhouseCoopers. The firm in January polled about 300 executives at U.S. companies in a range of industries with revenue of $500 million or more.

More than one petabyte of new data, equivalent to about 1 million gigabytes, is entering Morgan Stanley's systems every month, according to the bank.

Morgan Stanley's new data group has worked with internal AI teams on processing and summarizing unstructured data related to commercial real-estate loans. Unstructured data is a broad category that includes web pages, PDF files and other data not already housed in rows and columns. The data group helped develop an application that allows traders to analyze the risk of a loan without having to do deeper analysis manually.

Another AI system developed with help from the group analyzes transactions that can indicate fraudulent behavior. This represents a major shift in the way AI can help humans process massive amounts of data that otherwise couldn't be analyzed, Ms. Wetmur said. "In the past, you couldn't consume the data in the speed that was needed to get these insights," she said.

Separately, Ms. Wetmur has been working on data projects to cut the time it takes to test various applications before deployment. To do that, her team sought technology from Redwood City, Calif.-based Delphix Corp., a vendor that allows companies to virtualize, secure and manage data.

Delphix's technology allows data to exist in one virtual copy for each group that needs it so that changes can be made on a single copy in real time. This significantly speeds up the delivery of application code, Ms. Wetmur said. Application testers can now have access to accurate, up-to-date data in minutes instead of the 10 to 12 hours it would take through traditional methods of copying data, which can be slow and expensive and require manual processes.

So-called data virtualization is also more cost-efficient because it reduces the need to provision new servers, hardware or databases. In addition, the technology helps drive down infrastructure costs, because the bank isn't storing as many traditional database copies that take up massive amounts of storage space.

Write to Sara Castellanos at sara.castellanos@wsj.com

(END) Dow Jones Newswires

April 22, 2019 05:14 ET (09:14 GMT)

Copyright (c) 2019 Dow Jones & Company, Inc.

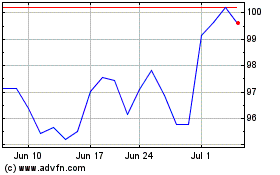

Morgan Stanley (NYSE:MS)

Historical Stock Chart

From Mar 2024 to Apr 2024

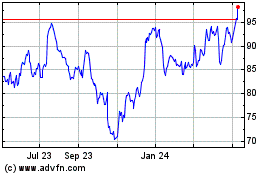

Morgan Stanley (NYSE:MS)

Historical Stock Chart

From Apr 2023 to Apr 2024