Google Quietly Disbanded Another AI Review Board Following Disagreements

April 14 2019 - 10:29AM

Dow Jones News

By Parmy Olson

LONDON -- Google is disbanding a panel set up here to review its artificial-intelligence work in health care, people familiar with the matter say, as disagreements about its effectiveness dogged one of the tech industry's highest-profile efforts to govern itself.

The Alphabet Inc. unit is struggling with how best to set guidelines for its sometimes-sensitive work in AI -- the ability for computers to replicate tasks that only humans could do in the past. The move also highlights the challenges Silicon Valley faces in setting up self-governance systems as governments around the world scrutinize issues ranging from privacy and consent to the growing influence of social media and screen addiction among children.

AI has recently become a target in that stepped-up push for oversight as some sensitive decision-making -- including employee recruitment, health-care diagnoses and law-enforcement profiling -- is increasingly being outsourced to algorithms. The European Commission is proposing a set of AI ethical guidelines and researchers have urged companies to adopt similar rules. But industry efforts to conduct such oversight in-house have been mixed.

Earlier this month, for instance, Google unveiled a high-profile, global, independent ethics council to guide it on the responsible development of all of its AI-related research and products. A week later, it disbanded the council following an outpouring of protests about the panel's makeup.

In a post earlier this month, Kent Walker, Google's senior vice president for global affairs, said the company "was going back to the drawing board."

Months earlier, in late 2018, Google began to wind down another independent panel set up to do the same thing -- this time for a division in the U.K. doing AI work related to health care. At the time, Google said it was rethinking that board because of a reorganization in its health-care-focused businesses.

But the move also came amid disagreements between panel members and DeepMind, Google's U.K.-based AI research unit, according to people familiar with the matter. Those differences centered on the review panel's ability to access information about research and products, the binding power of their recommendations and the amount of independence that DeepMind could maintain from Google, according to these people.

A spokeswoman for DeepMind's health-care unit in the U.K. declined to comment specifically about the board's deliberations. After the reorganization, the company found that the board, called the Independent Review Panel, was "unlikely to be the right structure in the future."

Google bought DeepMind in 2014, promising it a degree of autonomy to pursue its research on artificial intelligence. In 2016, DeepMind set up a special unit called DeepMind Health to focus on health-care-related opportunities. At the same time, DeepMind co-founder Mustafa Suleyman unveiled a board of nine veterans of government and industry, drawn from the arts, sciences and technology sectors, to meet once a quarter and scrutinize its work with the U.K.'s publicly funded health service. Among its tasks, the group had to produce a public annual report.

Two months after its founding, DeepMind Health admitted missteps in accessing records of 1.6 million patients in the U.K. without proper disclosure from the partnering hospital to patients. The board was praised for going on to publicly raise concerns in its annual report about the lack of clarity around that data-sharing agreement, and for recommending that DeepMind Health publish all future contracts with public health providers, a recommendation the division complied with.

But internally, some board members chafed at their inability to review the full extent of the group's AI research and the unit's strategic plans, according to the people familiar with the matter. Members of the board weren't asked to sign nondisclosure agreements about the information they received. Some directors felt that limited the amount of information the company shared with them and thus the board's effectiveness, according to one person.

Not all board members felt cut out. "We were told a huge amount of stuff and trusted to be responsible with it," said Julian Huppert, a former British lawmaker who was on the review board.

Tensions increased when DeepMind Health told the independent board that Google was taking a more hands-on approach to running the unit, according to these people. In November, DeepMind Health announced that one of its best-known projects, an app called Streams that helps hospital doctors in the U.K. detect kidney disease, would be run from Google's Mountain View, Calif., headquarters. That transition is still in progress.

Google said it would build the app into an "AI-powered assistant for nurses and doctors everywhere." That caused concern in public health and privacy circles because of previous assurances from Google and DeepMind that the two wouldn't share health records. DeepMind Health was renamed Google Health, becoming part of an umbrella division uniting Google's other health-focused units like health-tracking platform Google Fit and Verily, a life-sciences research arm.

Inside the review board, many directors felt blindsided, according to people familiar with the matter. Some directors complained they could have played a helpful role in explaining the change of control of the Streams app to the public if given earlier insight.

The review panel still plans to publish a final "lessons learned" report that will make recommendations about how better to set up such boards in the future, according to a person familiar with the matter.

(END) Dow Jones Newswires

April 14, 2019 10:14 ET (14:14 GMT)

Copyright (c) 2019 Dow Jones & Company, Inc.

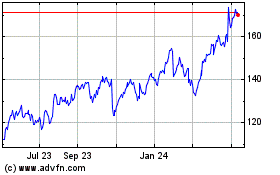

Alphabet (NASDAQ:GOOG)

Historical Stock Chart

From Mar 2024 to Apr 2024

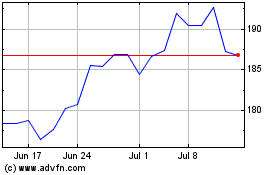

Alphabet (NASDAQ:GOOG)

Historical Stock Chart

From Apr 2023 to Apr 2024