Flaw in Facebook's Messenger Kids Exposed Children to Unauthorized Chats

July 23, 2019 - 11:44AM

Dow Jones News

By Sarah E. Needleman

A technical error recently allowed children on a Facebook Inc. messaging app to interact with users who weren't approved by their parents, the latest misstep that further dents the company's track record on privacy and security.

Messenger Kids launched in late 2017 as a stand-alone app that allows children between the ages of 6 and 12 to send messages and videos to contacts their parents signed off on. Facebook hasn't released a public statement on the error, but a spokesman confirmed that the company notified thousands of parents of children who had been included in the compromised chats and said those conversations had been turned off.

The incident was first reported by The Verge, a technology news website.

Facebook has been under pressure from privacy advocates who argue Messenger Kids is a danger to its young users and should be shut down. Last year several privacy-advocacy groups asked the Federal Trade Commission to investigate the tech giant for potentially violating federal laws governing children's privacy online. In January, Campaign for a Commercial-Free Childhood said it joined 14 other groups in sending a letter to Facebook Chief Executive Mark Zuckerberg for a second time urging the discontinuation of Messenger Kids.

The latest issue also comes as the FTC is expected as soon as this week to announce a settlement with Facebook that includes a roughly $5 billion fine over its user-privacy practices. The agency is also expected to impose a mandate to create an internal privacy team for vetting major new products.

Facebook said when it unveiled Messenger Kids that the app was developed in response to growing safety concerns from parents whose children wanted to interact online. It drew criticism from some child-development experts, though, who argued that the app could endanger children by exposing them to inappropriate messages and getting them hooked on social media.

Other social platforms, such as Alphabet Inc.'s YouTube, have also created products for children in recent years.

The FTC is also expected to soon announce a settlement with YouTube over alleged violations of children's privacy protections after privacy advocates called on the agency to remove all content on the video platform directed at kids and impose tens of billions of dollars in fines.

Earlier this year, the FTC announced a $5.7 million settlement with TikTok over allegations that the social-media app illegally collected personal information from children. The agency, which said the TikTok fine was the largest civil penalty assessed in a children's privacy case, claimed that TikTok violated a law that requires websites and online services aimed at children to obtain parental consent before collecting personal information from anyone under the age of 13. TikTok in a blog post said it was committed to protecting its users' data and that it created tools for parents to protect their teens and enabled additional privacy settings.

Write to Sarah E. Needleman at sarah.needleman@wsj.com

(END) Dow Jones Newswires

July 23, 2019 11:29 ET (15:29 GMT)

Copyright (c) 2019 Dow Jones & Company, Inc.

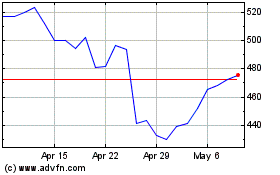

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Mar 2024 to Apr 2024

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Apr 2023 to Apr 2024