EU Backs Fines for Tech Firms Over Terror Propaganda -- Update

September 12, 2018 - 9:12AM

Dow Jones News

By Sam Schechner

The European Union is proposing massive fines for online providers that aren't fast enough in removing terrorist content from their services, raising pressure on big tech firms like Facebook Inc. and Alphabet Inc.'s Google that have backed voluntary approaches.

The bloc's executive arm Wednesday proposed new legislation that would create a legal obligation for any online service to remove terrorist content within an hour of being notified of its presence, and to install automated systems to prevent removed content from popping up again.

In March, the EU issued new guidelines calling for faster content removal, with the explicit threat of introducing legislation if companies didn't act fast enough.

"Systematic failures" to remove content within one hour would expose companies to fines of up to 4% of their world-wide revenue for the prior year, according to the proposal.

For Alphabet that would be a maximum of $4.43 billion and for Facebook it would be $1.63 billion.

"One hour is the decisive time window, when the greatest damage can take place," said European Commission President Jean-Claude Juncker during a speech Wednesday in the European Parliament.

The proposal requires approval from the EU's parliament and member states to become law.

The imposition of obligations and heavy fines is a starkly new approach for the EU, which is under pressure from member states to act. Until now, the EU has asked for voluntary cooperation from tech companies to speed up their removal of terrorist content from their services.

Tech firms said Wednesday that they support the EU's goal of rapidly removing all terrorist content from their services. Google and Facebook both said they have invested heavily in using artificial intelligence tools to flag potential terrorist content, and at times to remove it automatically -- particularly in the case of content that had been previously removed.

Google says its automated tools flag violent extremist YouTube videos so quickly that over half of those it removed in the first quarter had been seen fewer than 10 times. Facebook said last fall that 99% of the Islamic State and al Qaeda material it removes is blocked before it is seen by any users.

The EU said in January that big tech companies that were part of its voluntary code of conduct had removed 70% of the content notified to them by European authorities within 24 hours, up from just 28% in mid-2016.

But political pressure has been rising from EU member states, such as the U.K., France and Italy, to move faster -- and to make sure smaller tech platforms are obliged to comply as well.

EU officials also want to get ahead of plans in some member countries to implement their own rules holding tech firms responsible. Already, Germany requires social-media companies like Facebook and Twitter Inc. to delete illegal content -- ranging from libel to terrorist content -- or face fines of up to EUR50 million.

Separately, the EU's executive arm made another legislative proposal Wednesday to better safeguard its elections from foreign interference and online manipulation. The new rules would make political parties and organizations liable for fines of up to 5% of their annual budget if they are found to have violated EU privacy laws in an effort to influence the outcome of EU elections.

--Valentina Pop contributed to this article.

Write to Sam Schechner at sam.schechner@wsj.com

(END) Dow Jones Newswires

September 12, 2018 08:57 ET (12:57 GMT)

Copyright (c) 2018 Dow Jones & Company, Inc.

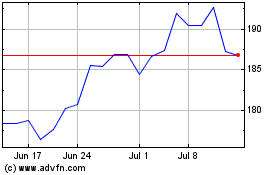

Alphabet (NASDAQ:GOOG)

Historical Stock Chart

From Mar 2024 to Apr 2024

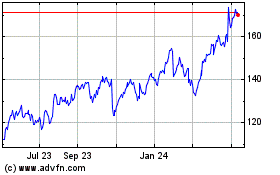

Alphabet (NASDAQ:GOOG)

Historical Stock Chart

From Apr 2023 to Apr 2024