Google Says It Won't Allow Its Artificial Intelligence in Military Weapons

June 07 2018 - 3:31PM

Dow Jones News

By Douglas MacMillan

Google won't allow its artificial-intelligence products to be used in military weapons, the company said Thursday, as it tries to balance its "Don't Be Evil" mantra with the wide-ranging applications of its technology.

In a new 8,000-word set of ethical principles and guidelines, Google outlined how it plans to manage -- and in some cases limit -- the application of artificial intelligence, a powerful and emerging set of technologies that Google views as key to its growth.

Google, the primary business unit of Alphabet Inc., has recently come under criticism from its own employees for supplying image-recognition technology to the U.S. Department of Defense, in a partnership called Project Maven. Google told employees earlier this month it wouldn't seek to renew its contract for Project Maven, a person familiar with the matter said at the time, and that decision in turn was blasted by some who said the company shouldn't be conflicted about supporting national security.

Google's artificial intelligence also recently generated public alarm after the company demonstrated a robotic voice that can trick humans into thinking it is real.

Google is having to expand its definition of ethics as its technology seeps more and more into the institutions of public life, from scientific research to military intelligence.

"These are not theoretical concepts," Google CEO Sundar Pichai said in the blog post sharing the new principles. "They are concrete standards that will actively govern our research and product development and will impact our business decisions."

Write to Douglas MacMillan at douglas.macmillan@wsj.com

(END) Dow Jones Newswires

June 07, 2018 15:16 ET (19:16 GMT)

Copyright (c) 2018 Dow Jones & Company, Inc.

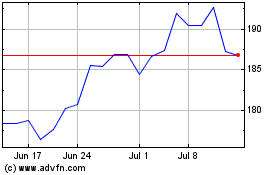

Alphabet (NASDAQ:GOOG)

Historical Stock Chart

From Mar 2024 to Apr 2024

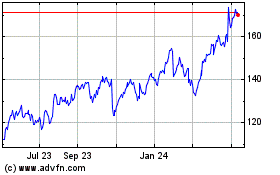

Alphabet (NASDAQ:GOOG)

Historical Stock Chart

From Apr 2023 to Apr 2024