By Christopher Mims

The mountain of evidence piling up this week exposes the rot at the core of Facebook Inc.

Facebook's troubling relationship with personal data, and the way that data has been repeatedly exploited, show the precarious nature of a business dependent on knowing everything it can about its own users. For a company whose very existence depends on its users turning up regularly, recent events threaten to give people a reason to reduce the amount they share on Facebook -- or leave it altogether.

The latest revelations are all related to this: Facebook was, for a time, a vehicle for exfiltrating massive amounts of data about its users to developers and data miners of every stripe.

Outside developers could build games, quizzes and other apps that funneled personal information from the accounts of users who willingly installed them, as well as from pretty much everyone who was their friend.

Facebook allowed this data access, hoping to build a business like Apple's iPhone App Store. But the collection of Facebook data by outside developers became such a concern that Facebook eventually restricted the practice. In cases where Facebook discovered developers were using that data outside of Facebook-approved apps, the company demanded that those developers delete the data.

Cambridge Analytica, the data-analysis firm that is suddenly all over the news, has worked for a number of political clients, including the Trump campaign. It allegedly obtained data from the makers of one of these apps and improperly kept the data for years, despite telling the social network the records had been destroyed.

"When developers create apps that ask for certain information from people, we conduct a review to identify policy violations and to assess whether the app has a legitimate use for the data," according to a statement from Justin Osofsky, Facebook's vice president of global operations. "Three years ago, we changed the product so that developers can't access the information of people's friends."

A Facebook spokeswoman says the company continues to improve its product and policies to prevent further abuse.

In the midst of this new scandal, we've been reminded that Facebook is having internal debates over how to handle revelations that Russians used the site to influence the 2016 presidential election. As the turmoil builds, politicians and regulators in the U.S. and Europe are demanding that Facebook make a full accounting of the abuse of its often-mysterious platform.

It won't be long before Facebook's soul-searching becomes more than an occasion to self-police and prompts users and regulators to act on their own.

A Troubling History

Again and again throughout Facebook's history, we've seen two disturbing problems. The first is that the company is unable to anticipate the ways its platform, and the incredibly powerful trove of sensitive data it produces, can be misused. In 2007, it was the way Facebook's Beacon advertising system shared users' shopping behavior and, indirectly, their life choices with their friends and family.

Personalization in advertising is sometimes nearly indistinguishable from surveillance, and personalization is at the heart of how Facebook makes money and captures so much of the online advertising pie.

The second recurring problem with Facebook, only recently made apparent, is that the company has a powerful, often negative effect on our psychology. A variety of studies have shown that the way Facebook encourages people to passively consume friends' posts can make them unhappy. Facebook has admitted this is the case, but says it has modified its algorithm to encourage other kinds of sharing that, at least in theory, are better at positively connecting people.

Other work has shown that Facebook has the power to reinforce our biases: because our friends online espouse views we share, we come to think those views are what the majority of the population believes. The company has contested this.

By virtue of using algorithms to target the most "engaging" content, including lucrative ads, Facebook and its ilk have become vehicles for spreading disinformation and sowing division.

Earlier this year, in an act of contrition, Facebook suggested that a pivot toward individual interactions and groups would be of greater value, psychological and otherwise, to its users. But it's now apparent that even its group features are fraught with the same spammers and potential influence operations that bedeviled its news feed.

"[Facebook Groups] are how Facebook radicalizes everyday Americans," says Renee DiResta, a researcher and analyst at Data for Democracy, an independent group of data scientists. She says Facebook's algorithm for recommending groups pushes someone interested in, for example, the antivaccination movement into groups that espouse extreme political ideologies. "It's precisely because Groups facilitate trust between participants and a feeling of belonging and camaraderie that they're very powerful tools in the wrong hands," she says.

In light of these issues -- and particularly the alleged misuse of data that has pummeled Facebook's stock and reputation -- the company's options are limited. And any potential solutions could have a significant impact on the company's bottom line.

It could spend hundreds of millions of dollars to employ human moderators to police potential abuse and misuse. It could hand over its data to outside researchers, who could independently study its impact on society. It could overhaul its data strategy to radically shrink the amount of data it gathers and stores -- and monetizes.

And if it doesn't fix itself quickly, Facebook could face intrusive regulation and even antitrust litigation.

(END) Dow Jones Newswires

March 20, 2018 17:20 ET (21:20 GMT)

Copyright (c) 2018 Dow Jones & Company, Inc.

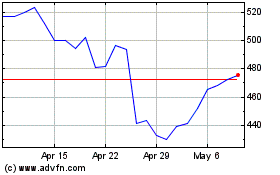

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Mar 2024 to Apr 2024

Meta Platforms (NASDAQ:META)

Historical Stock Chart

From Apr 2023 to Apr 2024